A lot of my non-tech friends, and honestly some of my tech friends, think AI is basically magic. You send a prompt and it replies with code, explanations, or entire essays. It looks intelligent. But under the hood, Large Language Models (LLMs) are not thinking; they are predicting.

This post breaks down how LLMs work in simple terms and shows a small C# example so you can connect theory to something concrete.

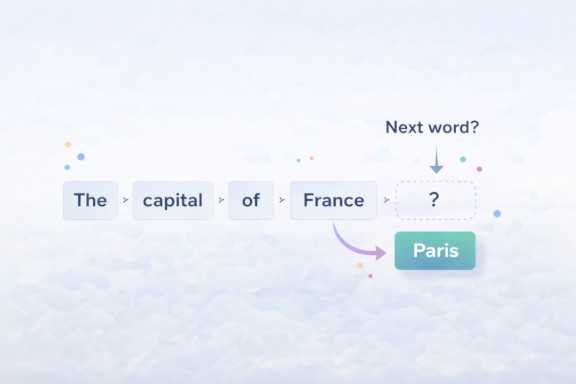

The core idea: prediction, not intelligence

An LLM is essentially a very advanced text prediction engine.

When you type:

"The capital of France is"

The model predicts the most likely next token:

"Paris"

Then it predicts the next token after that, and so on.

That's it.

Everything impressive about modern AI comes from how good the model is at predicting what comes next.

Not reasoning. Not consciousness. Prediction at massive scale.

What is a "token"?

Models don't see text the way humans do. They break text into small units called tokens.

A token can be:

- a word

- part of a word

- punctuation

- or a special symbol

For example:

"I love programming"

might become tokens like:

["I", " love", " program", "ming"]

The model predicts one token at a time.
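To make the splitting step concrete, here is a minimal sketch of a greedy longest-match tokenizer. The vocabulary is a hand-picked assumption chosen to reproduce the example above. Real tokenizers learn vocabularies of tens of thousands of pieces from data, so treat `ToyTokenizer` and its `Vocabulary` as illustrative names, not any library's API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ToyTokenizer
{
    // Hypothetical, hand-picked vocabulary. Real tokenizers learn theirs from data.
    private static readonly string[] Vocabulary = { "I", " love", " program", "ming" };

    public static List<string> Tokenize(string text)
    {
        var tokens = new List<string>();
        int position = 0;
        while (position < text.Length)
        {
            string rest = text.Substring(position);

            // Pick the longest vocabulary piece that matches at the current position.
            string best = "";
            foreach (string piece in Vocabulary)
            {
                if (piece.Length > best.Length && rest.StartsWith(piece, StringComparison.Ordinal))
                    best = piece;
            }

            // Fall back to a single character when nothing in the vocabulary matches.
            if (best.Length == 0) best = rest.Substring(0, 1);

            tokens.Add(best);
            position += best.Length;
        }
        return tokens;
    }

    public static void Main()
    {
        var tokens = Tokenize("I love programming");
        Console.WriteLine(string.Join(", ", tokens.Select(t => "\"" + t + "\"")));
        // Prints: "I", " love", " program", "ming"
    }
}
```

Notice that the leading space travels with the word. That is a common convention, and it is why " love" and "love" can end up as different tokens.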

Think of an LLM as autocomplete trained on enormous amounts of text (huge chunks of the internet, books, articles, and code) and then refined to pick up patterns, structure, and intent.

Training: how the model learns

During training, the model sees an enormous number of text sequences, adding up to billions (sometimes trillions) of tokens.

For each sequence, it repeatedly does this:

- Hide the next token

- Try to predict it

- Compare prediction vs reality

- Adjust internal weights

Over time, it builds a statistical map of language.

It doesn't "know" facts the way humans do. Instead, it learns patterns like:

- which words tend to follow others

- how code is structured

- how arguments are built

- how explanations flow

This is why it can write essays, generate code, or answer questions. It has learned the shape of language.
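The four training steps above (hide, predict, compare, adjust) can be sketched in a few lines. This is a deliberately tiny stand-in, not how a real LLM is built: it only looks at the previous word (a bigram model), the corpus is one sentence, and the names `ToyTraining`, `Predict` and `Train` are made up for this post. The learning rate and epoch count are arbitrary assumptions.

```csharp
using System;
using System.Linq;

public static class ToyTraining
{
    // Softmax over one row of the weight table: turns raw scores into probabilities.
    public static double[] Predict(double[,] weights, int prev)
    {
        int vocabSize = weights.GetLength(1);
        var row = new double[vocabSize];
        for (int j = 0; j < vocabSize; j++) row[j] = weights[prev, j];

        double max = row.Max();
        double[] exp = row.Select(v => Math.Exp(v - max)).ToArray();
        double sum = exp.Sum();
        return exp.Select(e => e / sum).ToArray();
    }

    // Runs the hide / predict / compare / adjust loop and returns the average loss per epoch.
    public static double[] Train(int[] ids, double[,] weights, int epochs, double learningRate)
    {
        int vocabSize = weights.GetLength(1);
        var losses = new double[epochs];
        for (int epoch = 0; epoch < epochs; epoch++)
        {
            double totalLoss = 0;
            for (int t = 0; t + 1 < ids.Length; t++)
            {
                int prev = ids[t];
                int target = ids[t + 1];                 // 1. hide the next token
                double[] probs = Predict(weights, prev); // 2. try to predict it
                totalLoss += -Math.Log(probs[target]);   // 3. compare prediction vs reality
                for (int j = 0; j < vocabSize; j++)      // 4. adjust internal weights
                {
                    // Gradient of the cross-entropy loss with respect to each score.
                    double gradient = probs[j] - (j == target ? 1.0 : 0.0);
                    weights[prev, j] -= learningRate * gradient;
                }
            }
            losses[epoch] = totalLoss / (ids.Length - 1);
        }
        return losses;
    }

    public static void Main()
    {
        string[] words = "the cat sat on the mat".Split(' ');
        string[] vocab = words.Distinct().ToArray();
        int[] ids = words.Select(w => Array.IndexOf(vocab, w)).ToArray();

        var weights = new double[vocab.Length, vocab.Length]; // all zeros: no knowledge yet
        double[] losses = Train(ids, weights, epochs: 200, learningRate: 0.5);

        Console.WriteLine($"Average loss: first epoch {losses[0]:0.000}, last epoch {losses[losses.Length - 1]:0.000}");

        double[] afterCat = Predict(weights, Array.IndexOf(vocab, "cat"));
        for (int j = 0; j < vocab.Length; j++)
            Console.WriteLine($"P({vocab[j]} | cat) = {afterCat[j]:0.000}");
    }
}
```

After training, "sat" should be by far the most likely word after "cat", while "the" stays split between "cat" and "mat" because the corpus contains both. That split is the "statistical map" idea in miniature.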

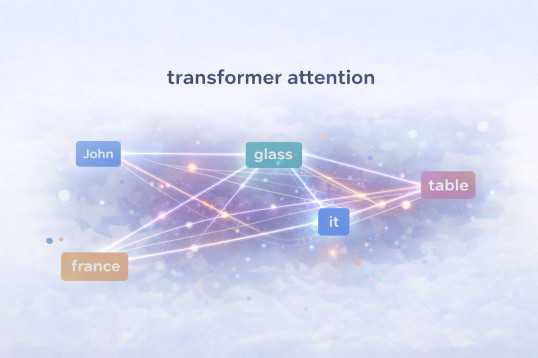

The architecture: transformers in plain English

Modern LLMs are based on something called a transformer architecture.

You do not need the maths to get the idea. A transformer is basically a system that looks at all the words in a sentence at once and decides which ones matter most to each other.

Instead of passing information along one word at a time like older recurrent models, transformers use a mechanism called attention. Attention lets the model weigh relationships between words. It asks questions like:

- which earlier words are important to this word?

- what context should I remember?

- what can I safely ignore?

That attention system is what allows the model to keep track of meaning over long passages instead of just short phrases.

If you write:

"John put the glass on the table. It broke."

The model learns that "it" refers to the glass, not the table.

This context tracking is what makes LLM responses feel coherent and intelligent.

Inference: how responses are generated

When you send a prompt to an LLM:

- Your text is converted into tokens

- Tokens pass through many neural layers

- The model outputs probabilities for the next token

- A token is chosen

- The new token is added to the sequence

- Repeat

This loop runs extremely fast.

The result is a flowing stream of predictions that form a response.
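The generation loop above can be sketched like this. The "model" here is a hard-coded lookup table keyed on the last token only, which is purely an assumption to keep the example runnable; a real LLM computes the probabilities with its neural layers and conditions on the whole sequence. `ToyGeneration`, `NextTokenProbs` and the `<end>` marker are illustrative names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ToyGeneration
{
    private const string EndToken = "<end>";

    // Hypothetical stand-in for a trained model: last token -> next-token probabilities.
    private static readonly Dictionary<string, Dictionary<string, double>> NextTokenProbs =
        new Dictionary<string, Dictionary<string, double>>
        {
            ["The"] = new Dictionary<string, double> { [" capital"] = 0.9, [" city"] = 0.1 },
            [" capital"] = new Dictionary<string, double> { [" of"] = 0.95, [" is"] = 0.05 },
            [" of"] = new Dictionary<string, double> { [" France"] = 0.6, [" Spain"] = 0.4 },
            [" France"] = new Dictionary<string, double> { [" is"] = 0.9, ["."] = 0.1 },
            [" is"] = new Dictionary<string, double> { [" Paris"] = 0.8, [" Lyon"] = 0.2 },
            [" Paris"] = new Dictionary<string, double> { [EndToken] = 1.0 },
        };

    public static List<string> Generate(IEnumerable<string> promptTokens, int maxNewTokens)
    {
        var sequence = promptTokens.ToList();
        for (int step = 0; step < maxNewTokens && sequence.Count > 0; step++)
        {
            // "The model outputs probabilities for the next token."
            string last = sequence[sequence.Count - 1];
            if (!NextTokenProbs.TryGetValue(last, out var probs)) break;

            // "A token is chosen." Here: always the most likely one (greedy decoding).
            string next = probs.OrderByDescending(p => p.Value).First().Key;
            if (next == EndToken) break;

            // "The new token is added to the sequence", then repeat.
            sequence.Add(next);
        }
        return sequence;
    }

    public static void Main()
    {
        var prompt = new[] { "The", " capital", " of", " France", " is" };
        Console.WriteLine(string.Concat(Generate(prompt, maxNewTokens: 10)));
        // Prints: The capital of France is Paris
    }
}
```

Always taking the top token is the simplest choice. Real systems often sample from the probabilities instead, which is why the same prompt can give different answers.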

A simple mental model

Here's a useful analogy:

An LLM is like a musician who has listened to every song ever written.

It doesn't remember songs note for note.

Instead, it understands patterns of rhythm, melody, and structure, and can generate new music that follows those patterns.

Language models do the same thing with text.

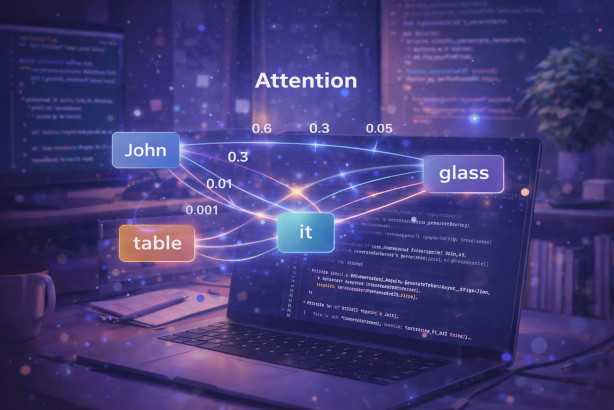

A practical C# example: a tiny transformer attention demo

We are not going to build an LLM in a blog post. But we can show one of the core transformer ideas in a way you can actually run.

Below is a tiny C# demo of attention. It uses a toy embedding setup where the token "it" ends up most similar to "glass", so the attention weights should rank "glass" highly.

This is not a real model. In real transformers, embeddings and the Q, K, V matrices are learned and there are many layers. This is just the core idea made tangible.
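For anyone who does want the maths, the usual way to write this step is scaled dot-product attention. Here $Q$, $K$ and $V$ are the query, key and value matrices, and $d_k$ is the dimension of the key vectors. This is the standard textbook form, included for reference only.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

The demo below stops at the softmax: it computes the attention weights but skips the $\sqrt{d_k}$ scaling and the final multiplication by $V$.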

    using System;
    using System.Collections.Generic;
    using System.Linq;

    public static class TinyAttentionDemo
    {
        public static void Run()
        {
            // Tiny token list for the example sentence.
            // We are not doing real tokenization here. This is just a simple demo.
            var tokens = new[] { "john", "put", "the", "glass", "on", "the", "table", "it", "broke" };

            // Hand-made embeddings. The only goal is to make "it" most similar to "glass".
            // In a real transformer these embeddings are learned.
            var emb = new Dictionary<string, double[]>
            {
                ["john"]  = new[] { 0.1, 0.0, 0.0, 0.0 },
                ["put"]   = new[] { 0.0, 0.1, 0.0, 0.0 },
                ["the"]   = new[] { 0.0, 0.0, 0.1, 0.0 },
                ["glass"] = new[] { 0.9, 0.1, 0.0, 0.0 },   // "object"
                ["on"]    = new[] { 0.0, 0.0, 0.0, 0.1 },
                ["table"] = new[] { 0.2, 0.8, 0.0, 0.0 },   // different direction
                ["it"]    = new[] { 0.85, 0.15, 0.0, 0.0 }, // close to "glass"
                ["broke"] = new[] { 0.7, 0.2, 0.0, 0.0 },   // action related to object
            };

            // In real transformers, Q, K and V are produced by multiplying embeddings by learned weight matrices.
            // For a tiny demo, we treat the embedding as both Q and K directly.
            int queryIndex = Array.IndexOf(tokens, "it");
            var q = emb[tokens[queryIndex]];

            // Scores are dot products between Q and every K.
            // Note: this toy scores every token in the sentence, including "it" itself and the later token "broke".
            var scores = tokens.Select(t => Dot(q, emb[t])).ToArray();

            // Softmax converts scores into probabilities (attention weights).
            var weights = Softmax(scores);

            Console.WriteLine("Query token: \"it\"");
            Console.WriteLine();

            // Print weights as a ranked list.
            var ranked = tokens
                .Select((t, i) => new { Token = t, Weight = weights[i] })
                .OrderByDescending(x => x.Weight)
                .ToList();

            Console.WriteLine("Attention weights (highest first):");
            foreach (var item in ranked)
            {
                Console.WriteLine($"{item.Token,-6} {item.Weight:0.000}");
            }

            Console.WriteLine();
            Console.WriteLine("Interpretation:");
            Console.WriteLine("The token \"it\" pays most attention to whichever token gets the highest weight.");
            Console.WriteLine("In this toy example, that should be \"glass\".");
        }

        // Dot product of two equal-length vectors: larger means "more similar".
        private static double Dot(double[] a, double[] b)
        {
            double sum = 0;
            for (int i = 0; i < a.Length; i++)
            {
                sum += a[i] * b[i];
            }
            return sum;
        }

        private static double[] Softmax(double[] x)
        {
            // Basic stable softmax: subtract the max before exponentiating to avoid overflow.
            var max = x.Max();
            var exp = new double[x.Length];
            double sum = 0;

            for (int i = 0; i < x.Length; i++)
            {
                exp[i] = Math.Exp(x[i] - max);
                sum += exp[i];
            }

            // Normalize so the weights add up to 1.
            for (int i = 0; i < x.Length; i++)
            {
                exp[i] /= sum;
            }

            return exp;
        }
    }

    public static class Program
    {
        public static void Main()
        {
            TinyAttentionDemo.Run();
        }
    }

Why LLMs feel intelligent

They feel smart because they:

- capture patterns across massive datasets

- maintain context across long sequences

- generate statistically coherent responses

But they are not reasoning like humans.

They are predicting the most plausible continuation.

That prediction just happens to look like intelligence.

AI is simple. Scale is the hard part.

Conceptually, the core mechanism behind an LLM is surprisingly simple: predict the next token.

What makes modern AI powerful is not a magical algorithm. It is scale.

Scale in:

- training data

- model size

- compute power

- infrastructure

- energy consumption

A small prediction model is easy to build. A planet-scale prediction engine that responds in near real time is not.

Running large models requires enormous GPU clusters, high-end networking, cooling, and continuous maintenance. Every prompt you send triggers real compute cost (you'll notice this pain if you've ever run a model locally). There is no "free thinking" happening in the background; someone is paying for electricity, hardware, and bandwidth.

This is why the economics of AI are unusual. The smarter and larger the model becomes, the more expensive it is to operate. Unlike most traditional software, where serving one more request costs almost nothing, LLM inference has a real marginal cost per request.

That raises a practical question for the industry: how long can generous free tiers exist?

Free access has helped adoption explode, but long-term sustainability depends on balancing user growth with infrastructure cost. As models grow more capable, the pressure to monetise increases.

In other words, AI is simple in theory, but brutally expensive in practice. That tension will shape how these tools evolve.

Where this leaves you as a developer

Understanding this changes how you use AI:

- prompts become instructions for prediction

- structure matters

- examples improve output

- clarity beats cleverness

You're not talking to a mind.

You're shaping probabilities.

And once you understand that, AI becomes a tool you can reason about instead of magic you hope works.
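As one illustration of "structure matters" and "examples improve output", here is a minimal sketch of a few-shot prompt builder. The `Input:` / `Output:` layout and the `PromptBuilder` name are assumptions made for this post, not a format any particular model requires. The idea is simply to end the prompt at the point where the most plausible continuation is the answer you want.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class PromptBuilder
{
    // Builds: instruction, worked examples, then the new input with an open "Output:" slot.
    public static string BuildFewShotPrompt(
        string instruction,
        IEnumerable<(string Input, string Output)> examples,
        string input)
    {
        var sb = new StringBuilder();
        sb.Append(instruction).Append("\n\n");

        foreach (var example in examples)
        {
            sb.Append("Input: ").Append(example.Input).Append('\n');
            sb.Append("Output: ").Append(example.Output).Append("\n\n");
        }

        // Leave the last output empty so the model's most likely continuation fills it in.
        sb.Append("Input: ").Append(input).Append('\n');
        sb.Append("Output:");
        return sb.ToString();
    }

    public static void Main()
    {
        var examples = new List<(string Input, string Output)>
        {
            ("The food was amazing", "positive"),
            ("I waited an hour and left", "negative"),
        };

        string prompt = BuildFewShotPrompt(
            "Classify the sentiment of each input as positive or negative.",
            examples,
            "Great service, will come back");

        Console.WriteLine(prompt);
    }
}
```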

Final thought

LLMs are not artificial brains.

They are probability engines trained on language.

But language is such a rich system that mastering it looks like intelligence.

And that's why this technology feels like a leap: not because machines are thinking, but because prediction at scale is powerful enough to mimic thought.

That's the real breakthrough.

By the way, this post was created using ChatGPT, prompted with love by Darran 😂